Dmitry Kryuk <hookmax@gmail.com>

Creation Date: May 16, 2014

Last updated: May 21, 2014

Version: 1.0

Overview

Workflow

EPM Automation

Export BRs from Planning repository

Create dummy forms

Load the package with LCM

Forms Extract

Generate LoadRunner scripts based on tokenized LoadRunner blueprint.

ALM Performance Center Steps

Upload VuGen scripts

Copy test scenario blueprint.

Replace default test script in copied scenario.

Update SLAs

Update the number of virtual users.

Total number of Vusers per script.

Geographic distribution.

Copy Test Set Blueprint.

Add test from steps 7-10 to copied test set

Execute the test set.

Overview

Let’s review the workflow of performance testing with LoadRunner/ALM Performance Center.

We focus on testing two central object types of Hyperion Planning applications: web forms and Business Rules (BRs).

We use a LoadRunner script blueprint that simulates the following actions:

- Authenticates to Workspace

- Opens the application

- Opens a web form

- Saves the web form

To test a business rule with this blueprint, we have to associate the BR with a web form and define the web form so that the BR runs “On Save” and its run-time prompts (RTPs) are hidden.

In the tested application itself, however, business rules may be associated with web forms differently. Other usage scenarios include:

- The BR is triggered from the “Business Rules” interface and is not associated with any web form.

- The BR is associated with a web form via menus.

- The BR uses RTPs, and the user is required to enter them manually.

For those cases we need to create a dummy web form for each BR. We do not check the “Use Members on Form” option, since the members on a dummy web form are irrelevant to a specific business rule; instead, we let the business rule use default variable values. This is not ideal, since data can be distributed in such a way that a particular BR does not run on a representative data set.

Hence, from the performance testing perspective, we would prefer BRs that have RTPs to be associated with real web forms and to run when the form is saved. BRs that don’t use RTPs can be tested with dummy web forms.

Workflow

EPM Automation

The diagram below describes the steps we need to perform in order to automate performance testing with a third-party tool like LoadRunner.

Export BRs from Planning repository

See the sample SQL script in the Appendix.

Create dummy forms

- Create one dummy web form per application

- Export the web form with LCM

- Tokenize the XML file of the web form (replace values such as plan type and Business Rule name with tokens). See the example of a tokenized LCM form in the Appendix.

- Execute a script that generates XML files from the tokenized template and prepares an LCM package to be loaded into the test application. I use a Python script that takes the list of BRs and a tokenized form as parameters.
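A minimal sketch of such a generator in Python, not the author's script. It assumes the tokenized form uses the hypothetical tokens @@FORM_NAME@@, @@PLAN_TYPE@@ and @@BR_NAME@@, and that dummy forms get a "PT_" name prefix (our assumption, chosen to match the FORMNAME not like 'PT%' filter in the Appendix query). The (BR name, plan type) pairs would come from the BRNAME and PLAN_TYPE columns of that query. The LCM package folder layout is not reproduced; write_package just writes the XML files into a directory you choose.

```python
from pathlib import Path
from xml.sax.saxutils import escape

# Hypothetical token names; use whatever tokens your tokenized form actually contains.
FORM_TOKEN, PLAN_TYPE_TOKEN, BR_TOKEN = "@@FORM_NAME@@", "@@PLAN_TYPE@@", "@@BR_NAME@@"
XML_QUOTES = {'"': "&quot;", "'": "&apos;"}


def dummy_form_name(br_name: str) -> str:
    # "PT_" prefix is an assumption matching the 'PT%' filter in the Appendix query.
    return f"PT_{br_name}"


def render_form(template: str, br_name: str, plan_type: str) -> str:
    """Fill one copy of the tokenized form XML for a single business rule."""
    values = {
        FORM_TOKEN: dummy_form_name(br_name),
        PLAN_TYPE_TOKEN: str(plan_type),
        BR_TOKEN: br_name,
    }
    xml = template
    for token, value in values.items():
        # Escape &, <, > and quotes so a rule name cannot break the XML.
        xml = xml.replace(token, escape(value, XML_QUOTES))
    return xml


def build_package(template: str, brs: list[tuple[str, str]]) -> dict[str, str]:
    """Map output file name -> form XML for every (BR name, plan type) pair."""
    return {f"{dummy_form_name(br)}.xml": render_form(template, br, pt) for br, pt in brs}


def write_package(files: dict[str, str], out_dir: Path) -> None:
    # out_dir is a placeholder; arrange the files into the layout your LCM export uses.
    out_dir.mkdir(parents=True, exist_ok=True)
    for name, xml in files.items():
        (out_dir / name).write_text(xml, encoding="utf-8")
```

Keeping rendering (build_package) separate from file I/O (write_package) makes it easy to inspect the generated XML before anything is written.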

Load the package with LCM

Forms Extract

Now all our business rules are associated with a web form that will trigger the rule. Once the LCM package is loaded, we can extract the web forms and their IDs from the Planning repository. We will need those IDs in subsequent steps to create the test scripts.

Generate LoadRunner scripts based on tokenized LoadRunner blueprint.
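A sketch of this step in Python, assuming the LoadRunner blueprint is a zipped VuGen script whose text files contain hypothetical tokens such as @@FORM_ID@@. The naming convention follows the script name discussed in the SLA section (saveFormTokenized_COS_CP_BR_60415.zip); the list of file extensions treated as text and the UTF-8 encoding are assumptions to adjust for your scripts.

```python
import io
import zipfile

# File types treated as text inside a VuGen script archive (assumption; adjust as needed).
TEXT_SUFFIXES = (".c", ".h", ".usr", ".prm", ".cfg", ".dat")


def script_name(app: str, obj_type: str, form_id: int) -> str:
    """Build a script file name such as saveFormTokenized_COS_CP_BR_60415.zip."""
    return f"saveFormTokenized_{app}_{obj_type}_{form_id}.zip"


def render_script(blueprint_zip: bytes, tokens: dict[str, str]) -> bytes:
    """Return a copy of the blueprint archive with every token replaced in its text files."""
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(blueprint_zip)) as src, \
         zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            data = src.read(info.filename)
            if info.filename.lower().endswith(TEXT_SUFFIXES):
                text = data.decode("utf-8")  # encoding is an assumption
                for token, value in tokens.items():
                    text = text.replace(token, value)
                data = text.encode("utf-8")
            # Binary members are copied unchanged.
            dst.writestr(info, data)
    return out.getvalue()
```

For each extracted form you would call render_script with that form's ID and application name and save the result under script_name(...).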

ALM Performance Center Steps

The diagram below describes the steps needed to prepare ALM objects for testing.

Upload VuGen scripts

We can now upload the LoadRunner scripts to ALM Performance Center. The upload itself is a manual and time-consuming process: unfortunately, the ALM interface does not allow bulk uploads, and you can upload only 5 scripts at a time.
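Since the upload stays manual, a small helper can at least split the generated scripts into upload batches of 5, giving a checklist to work through in the ALM interface. The function name is hypothetical.

```python
MAX_SCRIPTS_PER_UPLOAD = 5  # ALM upload limit described above


def upload_batches(scripts: list[str], size: int = MAX_SCRIPTS_PER_UPLOAD) -> list[list[str]]:
    """Sort script file names and cut them into consecutive batches of at most `size`."""
    ordered = sorted(scripts)
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


for n, batch in enumerate(upload_batches([f"script_{i:02d}.zip" for i in range(12)]), 1):
    print(n, batch)
```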

After a test script is uploaded, it needs to be associated with a test scenario. A test scenario includes the following information:

- Test scripts to run

- Load generators to use

- WAN emulation configuration (Shunra)

- Number of users for each test

- Configuration of how the load builds up

- Duration of the test

- SLAs

- Monitoring

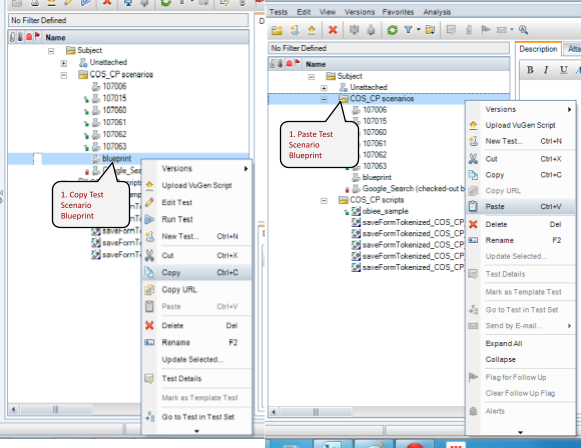

Copy test scenario blueprint.

We use a test scenario blueprint as a starting point. After copying the blueprint, we rename it: the name of the new test scenario is the ID of the web form being tested.

Replace default test script in copied scenario.

The copied test scenario must be modified to reflect the characteristics of the tested Business Rule. The first item to update is the test script name in the Workload interface. This is necessary even when an existing test script was re-uploaded: when ALM overwrites a script, it deletes the old script, which invalidates all objects referencing it, and then uploads the new script and creates new records in the repository. Hence all objects that referenced the old script need to be updated.
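The copy itself is done in the ALM web interface, so the following is only an offline Python model, with hypothetical class and field names, of what changes when a blueprint is copied: the name becomes the form ID, the Workload script is replaced, and the SLAs tied to the old script are dropped so they can be recreated. Load generators, WAN emulation and monitoring are left out because the copy keeps them as they are in the blueprint.

```python
from dataclasses import dataclass, field, replace


@dataclass
class Scenario:
    """Hypothetical offline record of the scenario settings a copy has to change."""
    name: str
    script: str  # script assigned in the Workload interface
    vusers_by_group: dict[str, int] = field(default_factory=dict)
    duration_min: float = 0
    slas: dict[str, float] = field(default_factory=dict)  # transaction -> 90th percentile threshold, sec


def copy_blueprint(blueprint: Scenario, form_id: int, script: str) -> Scenario:
    """Copy the blueprint, rename it to the form ID and point it at the uploaded script."""
    return replace(
        blueprint,
        name=str(form_id),
        script=script,
        vusers_by_group=dict(blueprint.vusers_by_group),  # own copy; blueprint stays unchanged
        slas={},  # SLAs referencing the old script are invalid and must be recreated
    )
```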

Update SLAs

Existing SLAs become invalid once we update the test scripts in the Workload interface, so we need to delete the existing SLA and create a new one. In our case, SLAs are defined on the 90th percentile of execution time per transaction. The following transactions are available:

- saveForm_Transaction: allows monitoring of BR execution time. When we test a business rule associated with a dummy web form, this is the only transaction we are interested in.

- OpenWebForm: needs to be defined when we test web form retrieval.

- EPM_Login and OpenPlanningApp: allow monitoring of the login time and of the time to open an application. We do not define SLAs for these actions when we test web forms or BRs. We do want to know how long it takes to log in or to open the application, but that behavior is not related to a specific business rule, so we test those transactions separately.

The script name tells us which transactions need to be defined. A script can test a form, a BR, or both. Take one of the scripts as an example: saveFormTokenized_COS_CP_BR_60415.zip. Its name consists of the application name (COS_CP), the tested object type (BR), and the form ID (60415). If the object type is BR, we define only the saveForm_Transaction SLA. If the object type is FORM, we define only the OpenWebForm SLA. If the object type is BOTH, we test a real application web form with an assigned BR that runs on save and hides prompts; in this case we define both the saveForm_Transaction and the OpenWebForm SLAs.
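The rule above can be written as a small lookup. This Python sketch assumes every generated script shares the saveFormTokenized_ prefix of the example; the function names are hypothetical. The name is split from the right because the application name itself may contain underscores (COS_CP).

```python
PREFIX, SUFFIX = "saveFormTokenized_", ".zip"

# Object type in the script name -> transactions that need an SLA.
SLA_TRANSACTIONS = {
    "BR": ["saveForm_Transaction"],
    "FORM": ["OpenWebForm"],
    "BOTH": ["saveForm_Transaction", "OpenWebForm"],
}


def parse_script_name(filename: str) -> tuple[str, str, int]:
    """Split a script name into (application, object type, form ID)."""
    if not (filename.startswith(PREFIX) and filename.endswith(SUFFIX)):
        raise ValueError(f"unexpected script name: {filename}")
    stem = filename[len(PREFIX):-len(SUFFIX)]
    app, obj_type, form_id = stem.rsplit("_", 2)
    return app, obj_type, int(form_id)


def sla_transactions(filename: str) -> list[str]:
    """Transactions to define SLAs for, based on the object type in the name."""
    _, obj_type, _ = parse_script_name(filename)
    return SLA_TRANSACTIONS[obj_type]


print(parse_script_name("saveFormTokenized_COS_CP_BR_60415.zip"))  # ('COS_CP', 'BR', 60415)
```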

To define an SLA, we use the average execution time when the system is at rest as a benchmark. We assume that a performance degradation of 50% during peak load is acceptable. So, if the benchmark time to run the Business Rule is 10 sec, the SLA defines 15 sec for the 90th percentile. Make sure the threshold time and the transaction are defined correctly on the last page, since ALM’s interface sometimes does not work as expected.
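The threshold rule is simply benchmark × 1.5. A Python sketch applying it per transaction, with a hypothetical function name and the 50% degradation as a parameter:

```python
def sla_thresholds(benchmarks_sec: dict[str, float], degradation: float = 0.5) -> dict[str, float]:
    """90th-percentile SLA per transaction: at-rest benchmark plus allowed degradation."""
    return {txn: round(t * (1 + degradation), 3) for txn, t in benchmarks_sec.items()}


print(sla_thresholds({"saveForm_Transaction": 10}))  # {'saveForm_Transaction': 15.0}
```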

Update the number of virtual users.

To update the Vusers, we need to calculate two parameters: the total number of Vusers per script and their geographic distribution.

Total number of Vusers per script.

The total number of Vusers is calculated from the weekly execution frequency. The weekly frequency is provided by the developer or application lead and is calculated as “Number of users” × “40 hours” × “Number of executions per user during the peak hour”. If a BR is executed by 30 users every 5 minutes during the peak hour (12 executions per user per hour), its frequency is 30 × 40 × 12 = 14400 per week.
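The same formula as a Python sketch (hypothetical function name), with the 40 peak hours per week as a default parameter:

```python
def weekly_frequency(users: int, minutes_between_runs: float, peak_hours: int = 40) -> float:
    """Users x peak hours per week x executions per user per peak hour."""
    runs_per_hour = 60 / minutes_between_runs
    return users * peak_hours * runs_per_hour


print(weekly_frequency(30, 5))  # 14400.0
```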

Obviously we will not run each test for a week, so we need to scale the frequency down to the test duration, taking the benchmark execution time into account. If, for example, the benchmark time is 30 sec, our SLA would be 45 sec (see the SLA section). Suppose we run a test with peak load for 12 min. If iterations are scheduled sequentially (with a single user, the next iteration starts when the previous one finishes), we could execute the BR 16 times (12 × 60 / 45). However, we need to execute the BR 72 times (14400 / 40 / (60 / 12)). Hence we need more than one user: specifically, at least 4.5 users (72 / 16). To be on the safe side, we add an extra 20% to this ratio and round up (4.5 × 1.2 = 5.4), so for this BR we end up with 6 Vusers. This can be expressed as:
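A formula consistent with the worked example, using symbols introduced here for illustration: F is the weekly frequency, S the SLA time in seconds, B the benchmark time in seconds, T the test duration in minutes, and 40 the number of peak hours per week. R is the number of executions the test must reproduce, Q the number of sequential executions one Vuser can complete. Note that T cancels in R/Q, so the Vuser count does not depend on the test duration.

```latex
\begin{aligned}
R &= \frac{F}{40}\cdot\frac{T}{60}, \qquad Q = \frac{60\,T}{S}, \qquad \frac{R}{Q} = \frac{F\,S}{144000} \\
N_{\mathrm{VU}} &= \left\lceil 1.2\,\frac{R}{Q} \right\rceil \\
T &\ge \max\!\left(10,\ \frac{2B}{60}\right)
\end{aligned}
```

With F = 14400, S = 45, B = 30 and T = 12: R = 72, Q = 16, N_VU = ⌈5.4⌉ = 6, and T = 12 ≥ max(10, 1).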

The last statement says that the test duration must be at least 10 min and at least twice the benchmark time.
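The whole calculation, including the duration check, as a Python sketch. The function name is hypothetical; the 20% headroom and the 40 peak hours are taken from the text above.

```python
import math

PEAK_HOURS_PER_WEEK = 40  # "40 hours" from the weekly-frequency definition


def vusers_per_script(weekly_freq: float, sla_sec: float, duration_min: float,
                      benchmark_sec: float, headroom: float = 0.2) -> int:
    """Total Vusers for one script, following the worked example above."""
    # Duration rule: at least 10 minutes and at least twice the benchmark time.
    if duration_min < max(10.0, 2 * benchmark_sec / 60):
        raise ValueError("test duration too short for this benchmark")
    # Executions the test must reproduce: peak hourly rate scaled to the test length.
    needed = weekly_freq / PEAK_HOURS_PER_WEEK * duration_min / 60
    # Executions one Vuser can complete back to back if each takes the SLA time.
    per_user = duration_min * 60 / sla_sec
    # Add headroom and round up; round() first strips float noise such as 6.0000000001.
    return math.ceil(round((1 + headroom) * needed / per_user, 9))


print(vusers_per_script(14400, 45, 12, 30))  # 6
```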

Geographic distribution.

In the previous section we calculated the total number of Vusers. Now we need to distribute them across geographies. The same business rule can be run by different communities around the globe, and their conditions can differ greatly in terms of network latency and bandwidth. The blueprint contains 4 main groups for which the WAN emulation parameters are already preset; what needs to be updated is the number of users in each group. The total number of Vusers in the blueprint is 100, so the default numbers represent the percentages by which users are distributed across geographic areas. You can keep these percentages or use different ones if there is a business justification.
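Turning percentages into whole Vusers needs a rounding rule. This Python sketch uses largest-remainder rounding, which is our choice rather than anything ALM does; it keeps the group sizes adding up exactly to the calculated total. Group names and percentages below are hypothetical placeholders for the blueprint's actual values.

```python
def distribute(total: int, percentages: dict[str, int]) -> dict[str, int]:
    """Split `total` Vusers across groups by percentage, keeping the sum exact."""
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must add up to 100")
    # Integer shares first, remembering how much each group lost to rounding down.
    shares = {g: total * p // 100 for g, p in percentages.items()}
    remainders = {g: total * p % 100 for g, p in percentages.items()}
    # Give the leftover users to the groups with the largest remainders.
    leftover = total - sum(shares.values())
    for g in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        shares[g] += 1
    return shares


# Hypothetical groups and split; replace with the blueprint's numbers.
print(distribute(6, {"GroupA": 40, "GroupB": 30, "GroupC": 20, "GroupD": 10}))
# {'GroupA': 2, 'GroupB': 2, 'GroupC': 1, 'GroupD': 1}
```

With small totals a low-percentage group can end up with 0 Vusers; if every geography must be represented, raise the total rather than force a minimum.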

To adjust the users, select the group, update the number of Vusers for that geography, and set the test duration. Make sure that after the initial load build-up there is a period during which all groups run in parallel.
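A quick way to check the last requirement before running the test: model each group's schedule and compute how long all groups are at full load simultaneously. The schedule model and its field names are hypothetical simplifications (start offset, ramp-up, then a steady period), with times in minutes.

```python
from dataclasses import dataclass


@dataclass
class GroupSchedule:
    """Hypothetical per-group schedule, all times in minutes from test start."""
    name: str
    start: float    # when the group starts adding Vusers
    ramp_up: float  # time to reach its full Vuser count
    steady: float   # time it then runs at full load


def parallel_window(groups: list[GroupSchedule]) -> float:
    """Minutes during which every group runs at full load at the same time (0 if never)."""
    full_from = max(g.start + g.ramp_up for g in groups)
    full_until = min(g.start + g.ramp_up + g.steady for g in groups)
    return float(max(0.0, full_until - full_from))


groups = [GroupSchedule("GroupA", 0, 2, 12), GroupSchedule("GroupB", 1, 2, 12)]
print(parallel_window(groups))  # 11.0
```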

Copy Test Set Blueprint.

Add test from steps 7-10 to copied test set

Execute the test set.

Appendix

Get List of BRs

-- To pull business rules that are not [directly associated with forms, run on save, hide prompts] and not attached to PERF TESTING forms.
-- In other words, those for which we need to create dummy forms.
with dt as (
  -- form / business rule pairs with their run-on-load, run-on-save and hide-prompt flags
  SELECT
     t1.OBJECT_ID FORMID
    ,t1.OBJECT_NAME FORMNAME
    ,t3.OBJECT_ID CALCID
    ,t3.OBJECT_NAME CALCNAME
    ,t2.RUN_ON_LOAD
    ,t2.RUN_ON_SAVE
    ,t2.HIDE_PROMPT
  FROM
     HSP_OBJECT t1
    ,HSP_FORM_CALCS t2
    ,HSP_OBJECT t3
    --,HSP_CALC_MGR_RULES t4
  where
        t1.OBJECT_TYPE=7
    and t3.OBJECT_TYPE=115
    and t1.OBJECT_ID=t2.FORM_ID
    --and t2.CALC_ID=t4.ID
    and upper(t2.CALC_NAME)=upper(t3.OBJECT_NAME)
)
,dt2 as (
  -- BRs already attached to regular (non-PT) forms that run on save and hide prompts
  select
     FORMID,FORMNAME,CALCID,CALCNAME
    ,RUN_ON_LOAD
    ,RUN_ON_SAVE
    ,HIDE_PROMPT
  from dt
  where 1=1
    and FORMNAME not like 'PT%'
    and RUN_ON_SAVE=1
    and HIDE_PROMPT=1
  order by FORMNAME)
,dt3 as (
  -- all Calculation Manager rules; name and application are parsed from the first 200 characters of the rule body
  SELECT t1.ID,
    substr(
      dbms_lob.substr(t1.BODY,200, 1),
      instr(dbms_lob.substr(t1.BODY,200, 1),'name="')+6,
      instr(dbms_lob.substr(t1.BODY,200, 1),'product="')-instr(dbms_lob.substr(t1.BODY,200, 1),'name="')-8
    ) BRNAME
    ,t1.LOCATION_SUB_TYPE
    ,t2.PLAN_TYPE
    ,substr(
      dbms_lob.substr(t1.BODY,200, 1),
      instr(dbms_lob.substr(t1.BODY,200, 1),'<property name="application">')+29,
      instr(dbms_lob.substr(t1.BODY,200, 1),'</property>')-instr(dbms_lob.substr(t1.BODY,200, 1),'<property name="application">')-29
    ) APPNAME
  FROM
     HSP_CALC_MGR_RULES t1
    ,HSP_PLAN_TYPE t2
  where t1.LOCATION_SUB_TYPE=t2.TYPE_NAME)
select
   dt3.ID
  ,dt3.BRNAME
  ,dt3.LOCATION_SUB_TYPE
  ,dt3.PLAN_TYPE
  ,dt3.APPNAME
from dt3
-- compare case-insensitively, as the form/rule join in dt does
where upper(dt3.BRNAME) not in (select upper(CALCNAME) from dt2);
